Business management software development, website creation and search engine optimization, networks and maintenance, design

To make this data even more useful, we have divided the world of links into two types: external and internal. Let's understand what kind of links fall into which bucket.

What are external links?

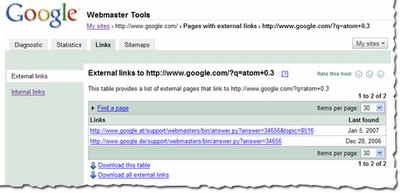

External links to your site are the links that reside on pages that do not belong to your domain. For example, if you are viewing links for http://www.google.com/, all the links that do not originate from pages on any subdomain of google.com would appear as external links to your site.

What are internal links?

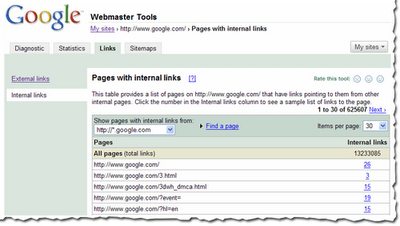

Internal links to your site are the links that reside on pages that belong to your domain. For example, if you are viewing links for http://www.google.com/, all the links that originate from pages on any subdomain of google.com, such as http://www.google.com/ or mobile.google.com, would appear as internal links to your site.
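The two definitions above can be sketched as a small classifier. This is only an illustration, not how Webmaster Tools computes its data: the function names `registered_domain` and `classify_link` are hypothetical, and the domain is approximated by keeping the last two labels of the host name, which is an assumption that fails for suffixes such as `co.uk`.

```python
from urllib.parse import urlsplit


def registered_domain(host: str) -> str:
    """Approximate the domain by keeping the last two host labels.

    Assumption: good enough for hosts like www.google.com; it does not
    handle multi-part public suffixes such as co.uk.
    """
    labels = host.lower().rstrip(".").split(".")
    return ".".join(labels[-2:])


def classify_link(source_url: str, site_url: str) -> str:
    """Return 'internal' if the linking page is on the site's domain
    (any subdomain), otherwise 'external'."""
    source_host = urlsplit(source_url).hostname
    site_host = urlsplit(site_url).hostname
    if not source_host or not site_host:
        raise ValueError("both URLs must include a scheme and a host")
    if registered_domain(source_host) == registered_domain(site_host):
        return "internal"
    return "external"


site = "http://www.google.com/"
print(classify_link("http://mobile.google.com/page", site))
print(classify_link("http://example.org/page", site))
```

Both URLs need a scheme (for example `http://`), because `urlsplit` cannot find the host name without one.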

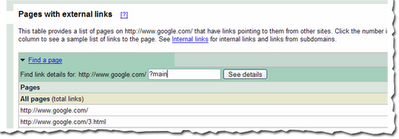

Viewing links to a page on your site

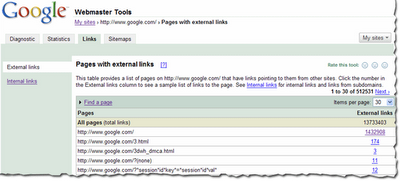

You can view the links to your site by selecting a verified site in your Webmaster Tools account and clicking the new Links tab at the top. Once there, you will see two options on the left, external links and internal links, with the external links view selected. You will also see a table that lists pages on your site, as shown below. The first column lists the pages of your site that have links to them, and the second column shows the number of external links to each page that we have available to show you. (Note that this may not be 100% of the external links to that page.)

The internal links view also provides a way to filter the data further: you can see links from any subdomain of the domain, or links only from the specific subdomain you are currently viewing. For example, if you are viewing the internal links to http://www.google.com/, you can either see links from all subdomains, such as http://mobile.google.com/ and http://www.google.com, or see links only from other pages on http://www.google.com.
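The subdomain filter described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the tool's actual implementation: `filter_internal_links` and its `same_subdomain_only` flag are hypothetical names, and the domain is again approximated by the last two labels of the host name.

```python
from urllib.parse import urlsplit


def filter_internal_links(source_urls, site_url, same_subdomain_only=False):
    """Keep only the linking pages that count as internal for site_url.

    same_subdomain_only=False keeps links from any subdomain of the domain;
    same_subdomain_only=True keeps links only from the exact host of site_url.
    """
    site_host = (urlsplit(site_url).hostname or "").lower()
    # Assumption: the domain is the last two labels of the host name.
    domain = ".".join(site_host.split(".")[-2:])
    kept = []
    for url in source_urls:
        host = (urlsplit(url).hostname or "").lower()
        if same_subdomain_only:
            if host == site_host:
                kept.append(url)
        elif host == domain or host.endswith("." + domain):
            kept.append(url)
    return kept


sources = [
    "http://mobile.google.com/a",
    "http://www.google.com/b",
    "http://example.org/c",
]
print(filter_internal_links(sources, "http://www.google.com/"))
print(filter_internal_links(sources, "http://www.google.com/", same_subdomain_only=True))
```

The first call keeps the links from both google.com subdomains; the second keeps only the link from www.google.com.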

.hrecipe{font:10px oswald;} #hrecipe-1{float:left; width:100px; padding:5px 0;} #hrecipe-2{float:right; width:auto;}

The Second Step

<div itemscope="itemscope" itemtype="http://data-vocabulary.org/Recipe"></div>

<span itemprop='itemreviewed'><span itemprop='description'>
<h3 class='post-title entry-title' itemprop='name'>
  <b:if cond='data:post.link'>
    <a expr:href='data:post.link'><data:post.title/></a>
  <b:else/>
    <b:if cond='data:post.url'>
      <a expr:href='data:post.url'><data:post.title/></a>
    <b:else/>
      <data:post.title/>
    </b:if>
  </b:if>
</h3>
</span>
</span>

<div class='hrecipe'>
  <div id='hrecipe-1'>
    <img class='photo' expr:alt='data:post.title' expr:src='data:post.thumbnailUrl'/>
  </div>
  <div id='hrecipe-2'>
    <span class='item'>
      <span class='fn'>dns.kardian</span>
    </span><br/>
    By <span class='author'><b><data:blog.title/></b></span><br/>
    Published: <span class='published'><data:post.timestampISO8601/></span><br/>
    <span class='summary'><data:post.title/></span><br/>
    <span class='review hreview-aggregate'>
      <span class='rating'>
        <span class='average'>4.5</span>
        <span class='count'>11</span>
        reviews
      </span>
    </span>
  </div>
</div>

Once everything is applied, check the result with the rich snippets testing tool at http://www.google.com/webmasters/tools/richsnippets

Tips to Make a Blog SEO Friendly: For bloggers, I will share tips and tricks to make a blog SEO friendly. The following tips are based on my own experience and, in my opinion, have proven effective at improving blog traffic.

Update: David actually used a brush, not a pen. We thought adding a thumbnail of his work would help him forgive our mistake :)

| Home Page | Single Page / Post Page |

.post { margin:.5em 0 1.5em; border-bottom:1px dotted $bordercolor; padding-bottom:1.5em; }

.post {
  background: url(http://i36.tinypic.com/xqid55.jpg);
  background-repeat: no-repeat;
  background-position: bottom center;
  margin:.5em 0 1.5em;
  border-bottom:0px dotted $bordercolor;
  padding-bottom:3.6em;
}

<div class='post-footer-line post-footer-line-3'/>

<center><img height='30' src='divider image url'/></center>

Tip: To increase the divider height, change 30 to the desired value. Replace divider image url in the snippet with the URL of your divider image, and that's it!

Google Page Rank update 2013 (Img. credit: freedigitalphotos)