
Seo Master presents to you:

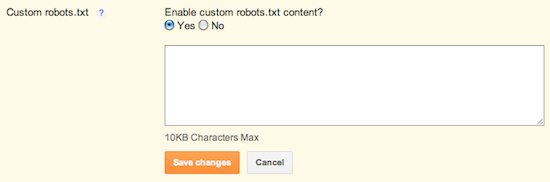

However, keep in mind that other sites may have linked to the pages that you’ve decided to restrict. Further, Google may index your page if we discover it by following a link from someone else's site. To display it in search results, Google will need to display a title of some kind, and because we won't have access to any of your page content, we will rely on off-page content such as anchor text from other sites. (To truly block a URL from being indexed, you can use meta tags.)

To exclude certain content from being searched, go to Settings | Search Preferences and click Edit next to "Custom robots.txt." Enter the content which you would like web robots to ignore. For example:

    User-agent: *
    Disallow: /about

You can also read about robots.txt in this post on the Google Webmaster’s blog.

Warning! Use with caution. Incorrect use of this feature can result in your blog being ignored by search engines.
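Because a mistake in these rules can hide the whole blog from crawlers, it may help to check a draft before saving it. The following is a minimal sketch using Python's standard `urllib.robotparser` module. The helper name `is_allowed` and the host `example.blogspot.com` are assumptions made for illustration, and Python's parser only approximates how a given search engine interprets the rules.

```python
from urllib.robotparser import RobotFileParser

# Draft rules, matching the example above.
ROBOTS_TXT = """\
User-agent: *
Disallow: /about
"""


def is_allowed(robots_txt, url, user_agent="*"):
    """Return True if the rules let `user_agent` crawl `url`.

    Hypothetical helper: it parses the rules from a string instead of
    fetching them from a live site.
    """
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    # example.blogspot.com is a placeholder host, not a real blog.
    for path in ("/about", "/about-us", "/2012/01/post.html", "/"):
        url = "https://example.blogspot.com" + path
        print(path, "allowed" if is_allowed(ROBOTS_TXT, url) else "blocked")
```

Note that `Disallow: /about` is a prefix match in this parser, so `/about-us` is blocked along with `/about`. The check covers crawling only; as noted above, a blocked URL can still be indexed if other sites link to it.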

Labels: seo